Simple Attention Visualizer

Description

I have created a simple attention visualizer for transformer models. It is available at this link. It can:

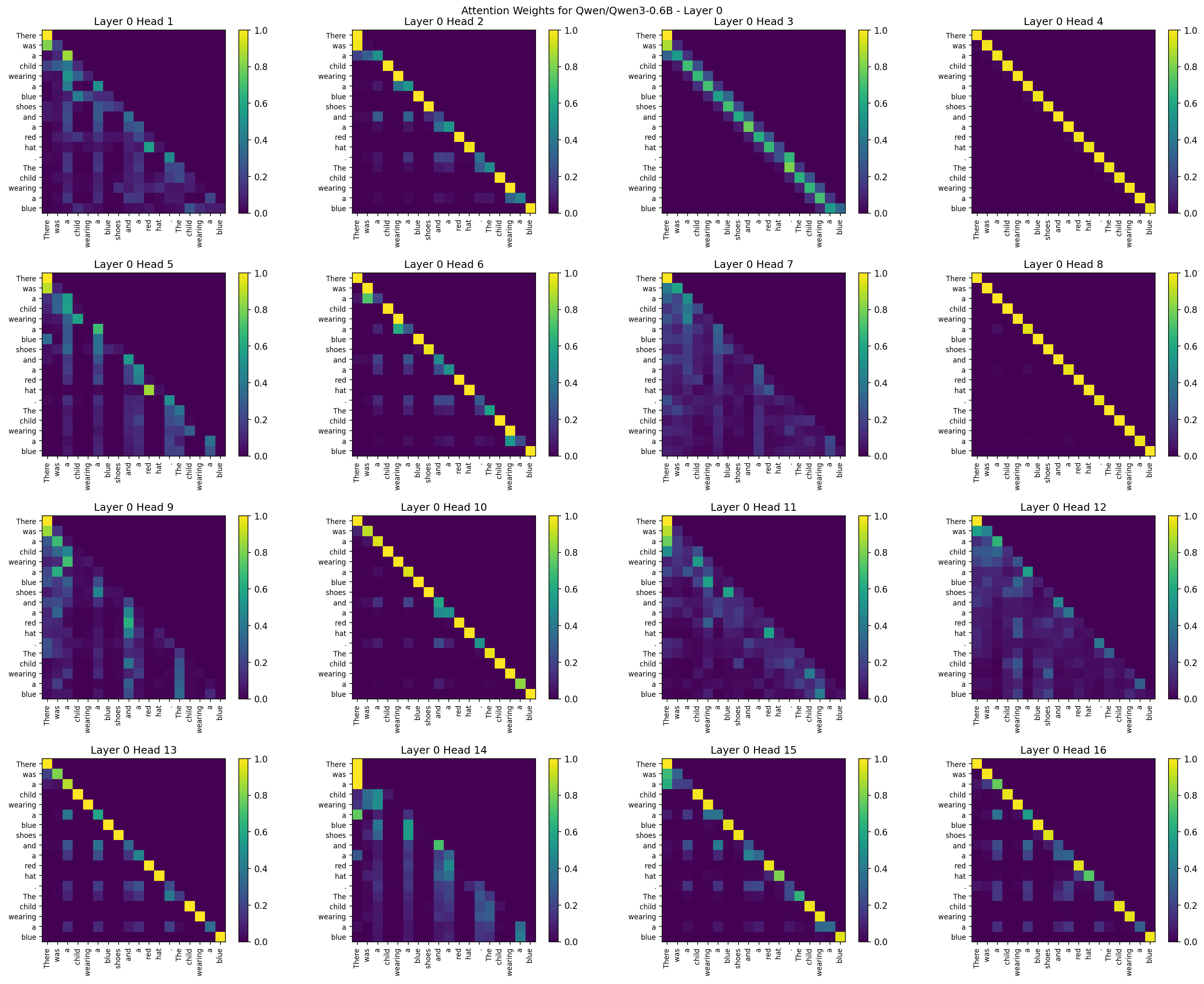

- Visualize all attention heads for a specific layer.

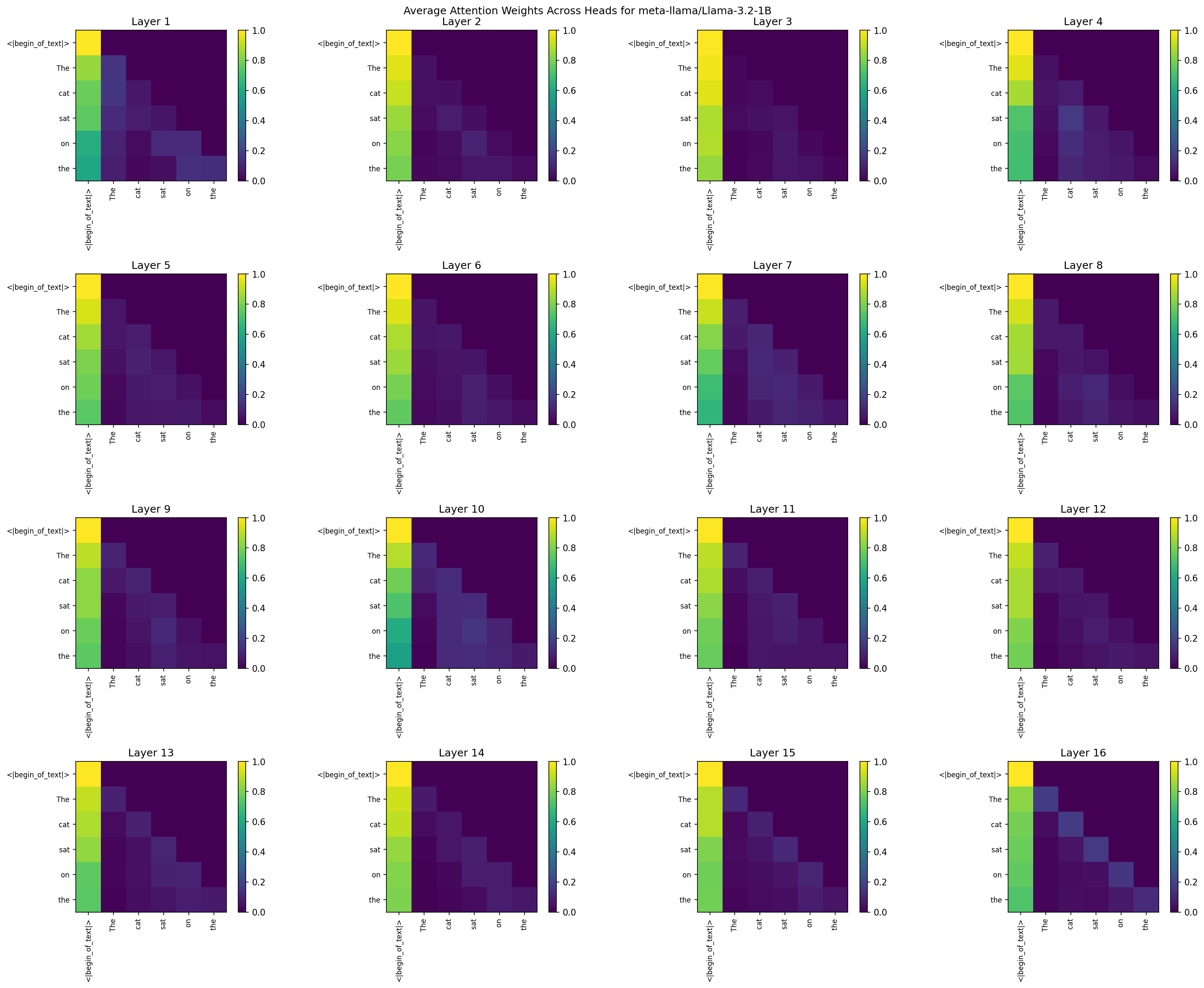

- Show the average attention for each layer.

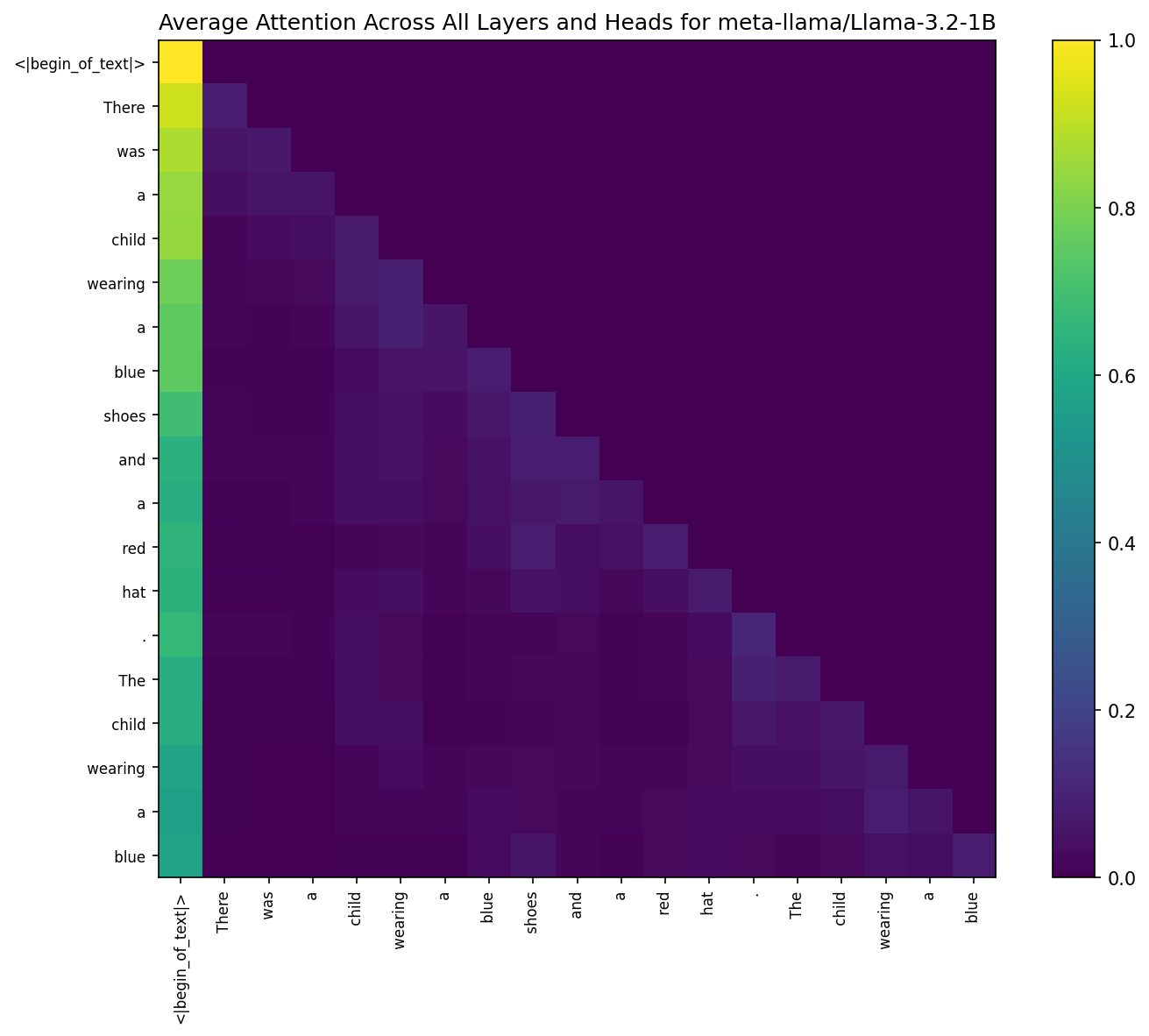

- Show a single heatmap that averages attention over all layers and heads.

The code should work for any causal LLM.

More details are in the repository.
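The three views listed above differ only in how the attention weights are averaged before plotting. Here is a minimal sketch of that averaging step in plain Python. It is not the code from the repository: the function names (`mean_maps`, `layer_averages`, `global_average`) and the nested-list layout `attn[layer][head][query][key]` are assumptions made for illustration. With Hugging Face `transformers`, weights of this kind can typically be requested by passing `output_attentions=True` to the model call; whether the visualizer obtains them this way is also an assumption.

```python
from typing import List

Matrix = List[List[float]]  # one attention map: rows are queries, columns are keys


def mean_maps(maps: List[Matrix]) -> Matrix:
    """Element-wise mean of a list of equally sized attention maps."""
    n = len(maps)
    rows, cols = len(maps[0]), len(maps[0][0])
    return [
        [sum(m[i][j] for m in maps) / n for j in range(cols)]
        for i in range(rows)
    ]


def layer_averages(attn: List[List[Matrix]]) -> List[Matrix]:
    """Average over heads, giving one map per layer (attn[layer][head] is a map)."""
    return [mean_maps(heads) for heads in attn]


def global_average(attn: List[List[Matrix]]) -> Matrix:
    """Average over every head of every layer, giving a single map."""
    return mean_maps([head for heads in attn for head in heads])


# Toy input (hypothetical values): 2 layers, 2 heads, 2 tokens.
# Each map is causal: a token never attends to a later token,
# so the upper triangle is zero and every row sums to 1.
attn = [
    [  # layer 0
        [[1.0, 0.0], [0.5, 0.5]],
        [[1.0, 0.0], [0.25, 0.75]],
    ],
    [  # layer 1
        [[1.0, 0.0], [1.0, 0.0]],
        [[1.0, 0.0], [0.0, 1.0]],
    ],
]

per_layer = layer_averages(attn)  # one heatmap per layer
overall = global_average(attn)    # [[1.0, 0.0], [0.4375, 0.5625]]
print(per_layer)
print(overall)
```

Each resulting map can then be passed to any heatmap plotting routine. Because the inputs are causal, the averaged maps stay lower-triangular, which is why the heatmaps for a causal LLM are empty above the diagonal.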

Visualization Examples
